Today, EMC announced the General Availability of XtremIO, their purpose-built all-Flash array. The announcement comes amid an unprecedented storm of pre-launch hyperbole from several vendors (as one might expect, very much “pro-” from EMC and rather “anti-” from some of the other all-Flash vendors).

In this (somewhat lengthy) post, I’ll walk through an introduction to XtremIO, its hardware, its operating system, and its built-in software features. I’ll also address XtremIO’s scalability and performance.

What Is XtremIO?

XtremIO was an Israel-based startup that EMC acquired in May of 2012. They created a designed-from-the-ground-up all-Flash storage array based on a scale-out architecture. This array has the same name as the former company, XtremIO.

The array has been functional since the acquisition – I saw my first XtremIO demo in a briefing at EMC World 2012 – but it hasn’t been made available to the general customer base until today. This delay has fueled a lot of speculation outside EMC about the wisdom of the decision to acquire XtremIO. I think seeing the full feature-set out in the open will lay any doubts to rest.

X-Bricks

The basic building block of the XtremIO array is the X-Brick. Each X-Brick consists of:

- One 2U disk shelf containing 25 eMLC SSDs, giving 10TB of raw capacity (7.5TB of this is usable)

- Two 1U controllers

- One or two 1U Battery Backup Units (a configuration with only one X-Brick comes with two for redundancy; each additional X-Brick in the cluster comes with one)

The controllers handle all I/O to and from the SSDs and are configured with true active/active access, not ALUA. (ALUA is “Asymmetric Logical Unit Access”, which is effectively storage array sleight-of-hand emulation of active/active access.)

Each controller has:

- 256GB RAM

- Two 8Gbps host-facing FC ports

- Two 10GbE host-facing iSCSI ports

- One 1GbE management port

- Two Infiniband ports for connecting to the RDMA Fabric back-end connecting X-Bricks to each other

The controllers are built entirely from commodity hardware. They contain no custom FPGAs, ASICs, Flash modules, or firmware. The magic of XtremIO comes from its RDMA Fabric and its software.

RDMA? What’s That?

RDMA stands for “Remote Direct Memory Access”. It’s a computer-to-computer communication technology that enables a network adapter to transfer data directly from the memory of one device to the memory of another without actually involving either device’s operating system. As you might imagine, this can occur at incredibly high speeds, thus the requirement for the back-end Infiniband connections to avoid introducing latency.

RDMA is most often used as an interconnect technology in high-performance computing (HPC) clusters, where it very rapidly gets the contents of memory to a large number of CPUs for processing. I’ll go into more detail on how XtremIO uses RDMA when I describe the XIOS operating system below.

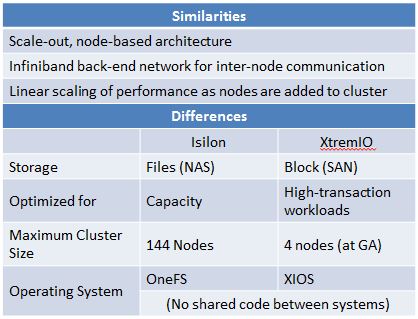

Comparisons to Isilon

Given the scale-out and node-based architecture of both XtremIO and Isilon, it’s inevitable that people will draw comparisons between the two platforms. I’ll summarize in the table below:

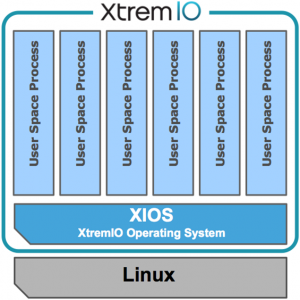

XIOS – The XtremIO Operating System

The heart of the XtremIO’s power is XIOS, the XtremIO Operating System. With a Linux kernel at its core, XIOS is designed to be multi-socket and multicore aware. The OS is extremely hyperthreaded (similar to the new VNX’s MCx architecture) and is designed to give maximum memory space to the I/O processes, allowing it to handle hundreds of thousands of concurrent I/O threads.

Content-Based Data Placement

The key to the XtremIO’s performance is the data layout and management used by XIOS. EMC calls this Content-Based Data Placement. It works something like this:

- Data comes into the XtremIO

- Each incoming 4KB block of data gets “fingerprinted”, giving it a unique hexadecimal ID

- This unique ID serves two purposes:

  - Identifying duplicate blocks (I’ll go into this in more detail below)

  - Acting as an address for the block

- Using the unique ID, all blocks are randomly distributed across all drives in all X-Bricks in the cluster (i.e.: all IDs starting with “0” are sent to a specific controller, all IDs starting with “1” to another, etc.)

- Only unique blocks are written to SSD – if a block is a duplicate of one already stored on the XtremIO cluster, a metadata pointer is created and no additional write to SSDs occurs

- Each block’s metadata stays in memory – all the time (this is where that RDMA fabric comes into play)

This data distribution mechanism ensures that all data is distributed evenly across all drives. In testing data loads, EMC showed only a 0.16% variation in the amount of data stored on each drive.
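The placement steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not XIOS's actual implementation: the use of SHA-1 as the fingerprint, the `Cluster` class, and the `owner` routing function are all hypothetical names chosen for the example, and garbage collection of overwritten blocks is left out.

```python
import hashlib
from collections import Counter

BLOCK_SIZE = 4096  # 4KB blocks, as described in the post


def fingerprint(block: bytes) -> str:
    """Hexadecimal content fingerprint (SHA-1 is an assumption for this sketch)."""
    return hashlib.sha1(block).hexdigest()


def owner(fp: str, num_controllers: int) -> int:
    """Route a block to a controller by the leading hex digit of its fingerprint."""
    return int(fp[0], 16) % num_controllers


class Cluster:
    """Toy model: unique blocks are stored once, everything else is metadata."""

    def __init__(self, num_controllers: int) -> None:
        self.num_controllers = num_controllers
        self.stored = {}   # fingerprint -> block data (unique blocks only)
        self.lun_map = {}  # (lun, lba) -> fingerprint (in-memory metadata)

    def write(self, lun: str, lba: int, block: bytes) -> bool:
        """Write one block; return True only if a physical write was needed."""
        if len(block) != BLOCK_SIZE:
            raise ValueError("block must be exactly 4KB")
        fp = fingerprint(block)
        self.lun_map[(lun, lba)] = fp  # metadata update happens either way
        if fp in self.stored:
            return False               # duplicate: pointer only, no SSD write
        self.stored[fp] = block
        return True

    def read(self, lun: str, lba: int) -> bytes:
        """Look up the fingerprint in metadata, then fetch the block by content."""
        return self.stored[self.lun_map[(lun, lba)]]

    def blocks_per_controller(self) -> list:
        """Count how many unique blocks each controller owns."""
        counts = Counter(owner(fp, self.num_controllers) for fp in self.stored)
        return [counts[i] for i in range(self.num_controllers)]
```

Because a good hash spreads its output uniformly, `blocks_per_controller()` tends toward an even split regardless of which LUN or address the data was written to, which is the property the post describes.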

This data-placement method means a number of things:

- All LUNs are spread across all Flash drives in all nodes concurrently

- All LUNs get the full performance of all Flash drives

- All LUNs get very predictable performance

- The cluster performs pretty much the same whether it’s nearly empty or nearly full.

I’m not sure if it’s by design or a happy coincidental side-effect, but this data distribution method also increases drive endurance and lifetime, as it minimizes the number of writes and rewrites to any given cell within an SSD.

All metadata remaining resident in memory all the time gives huge performance boosts. Lookups happen incredibly rapidly, with near-zero latency, and copying or cloning data is simply an in-memory metadata update.

Built-in Software Features

There are four software features built-in to XIOS that I find particularly interesting. I’ll walk through each of them below.

Thin Provisioning

While almost every storage platform available today offers an option for thin provisioning, XtremIO ends up being thinner than most.

By taking advantage of the content-based data placement methodology described above, every LUN on an XtremIO cluster is automatically thin-provisioned. There’s no need to pre-allocate some storage or set it to auto-add extents if it needs them. XtremIO only allocates storage on an as-needed basis, one unique 4KB block at a time…
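As a rough illustration of allocate-on-write, here is a minimal sketch of a thin LUN. The `ThinLun` class is hypothetical, deduplication is deliberately left out to keep the focus on allocation, and returning zeros for never-written blocks is an assumption about behavior rather than something the post states.

```python
BLOCK_SIZE = 4096  # 4KB allocation unit, as described in the post


class ThinLun:
    """Toy thin LUN: advertises a large size, consumes space only on write."""

    def __init__(self, provisioned_bytes: int) -> None:
        self.provisioned_blocks = provisioned_bytes // BLOCK_SIZE
        self.blocks = {}  # lba -> data, populated only when a block is written

    def _check(self, lba: int) -> None:
        if not 0 <= lba < self.provisioned_blocks:
            raise IndexError("LBA outside the provisioned range")

    def write(self, lba: int, data: bytes) -> None:
        self._check(lba)
        if len(data) != BLOCK_SIZE:
            raise ValueError("block must be exactly 4KB")
        self.blocks[lba] = data  # space is allocated here, not at creation

    def read(self, lba: int) -> bytes:
        self._check(lba)
        # Assumption: a never-written block reads back as zeros.
        return self.blocks.get(lba, bytes(BLOCK_SIZE))

    @property
    def allocated_bytes(self) -> int:
        return len(self.blocks) * BLOCK_SIZE
```

A 1TB `ThinLun` created this way reports `allocated_bytes == 0` until the first write, and grows by exactly 4KB per newly written block.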

Inline Data Reduction

As described above, as data is written to the XtremIO, each 4KB block is digitally fingerprinted, giving it a unique ID. Each unique block is written to SSD only once. Any duplicate instances of a given block simply cause an in-memory metadata update.

How much capacity-reduction any given customer will see from this feature is entirely dependent upon the particular data set being stored, but EMC says customers are seeing up to a 5:1 data reduction ratio. This means that an individual X-Brick’s 7.5TB of usable space could effectively store up to 37.5TB of data…
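The capacity arithmetic is simple enough to write down. The helper names below are made up for this example; the only inputs taken from the post are the 7.5TB usable figure and the "up to 5:1" ratio.

```python
def reduction_ratio(logical_blocks: int, unique_blocks: int) -> float:
    """Data reduction ratio: blocks the hosts wrote vs. blocks actually stored."""
    if unique_blocks <= 0:
        raise ValueError("unique_blocks must be positive")
    return logical_blocks / unique_blocks


def effective_capacity_tb(usable_tb: float, ratio: float) -> float:
    """Logical data that fits in the usable space at a given reduction ratio."""
    return usable_tb * ratio
```

For one X-Brick at the best-case ratio quoted by EMC, `effective_capacity_tb(7.5, 5.0)` returns `37.5`. A data set with little duplication will land much closer to the 7.5TB usable figure.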

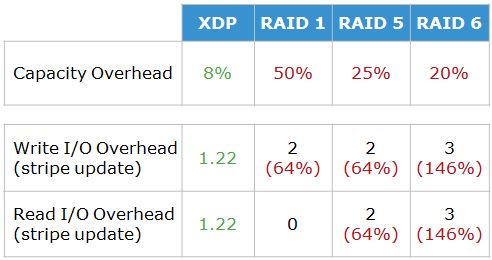

XDP

XDP stands for XtremIO Data Protection and is what XIOS uses instead of RAID to protect against SSD failure. It’s different in its approach to protecting the data, and accomplishes the job with a lower space overhead.

RAID writes full stripes across drives. XDP has been optimized to write partial stripes of unique 4KB blocks. This allows the system to perform at the same level whether it’s nearly empty or nearly full.

XDP requires no dedicated hot spares. In the event of an SSD failure, it uses a distributed rebuild process to recreate the missing data on the free space of all the remaining drives. Due to XtremIO’s thin provisioning, only actual data blocks need to be recreated – no need to rebuild empty space as can happen in a drive rebuild under traditional RAID protection.

XDP allows the XtremIO cluster to withstand a failure of up to six (6) SSD drives within a single X-Brick. It accomplishes this with only an 8% capacity overhead. XDP’s read and write I/O overhead also compares quite favorably with that of traditional RAID, as can be seen in the table below.

VAAI Support

I’ve always been a huge fan of VMware vSphere’s VAAI (vSphere Storage APIs for Array Integration).

XtremIO’s method of keeping metadata resident in memory at all times means that it responds to the VAAI X-COPY command with blinding speed. X-COPY is used when vSphere wants to copy or clone a VMDK file on an array that supports VAAI. Instead of vSphere needing to read the entire file and then re-write the entire file, vSphere instructs the storage to make a copy on vSphere’s behalf. For some storage this means taking advantage of native snapshot utilities. For XtremIO, this means an in-memory metadata update and no writing of any additional blocks to disk at all…
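To make "a clone is just a metadata update" concrete, here is a small sketch. The `Array` class, its reference counting, and the SHA-1 fingerprint are assumptions made for illustration; this is not the VAAI interface or XtremIO's real data structures.

```python
import hashlib
from collections import Counter


class Array:
    """Toy content-addressed array where cloning copies pointers, not data."""

    def __init__(self) -> None:
        self.blocks = {}          # fingerprint -> data (unique blocks)
        self.refs = Counter()     # fingerprint -> number of volume references
        self.volumes = {}         # volume name -> {lba: fingerprint}
        self.physical_writes = 0  # how many blocks were actually stored

    def write(self, volume: str, lba: int, data: bytes) -> None:
        fp = hashlib.sha1(data).hexdigest()  # fingerprint choice is an assumption
        vol = self.volumes.setdefault(volume, {})
        old = vol.get(lba)
        if old == fp:
            return                           # same content, nothing to do
        if fp not in self.blocks:
            self.blocks[fp] = data
            self.physical_writes += 1
        self.refs[fp] += 1
        vol[lba] = fp
        if old is not None:
            self._release(old)

    def _release(self, fp: str) -> None:
        """Drop one reference; free the block when nothing points at it."""
        self.refs[fp] -= 1
        if self.refs[fp] == 0:
            del self.refs[fp]
            del self.blocks[fp]

    def clone(self, src: str, dst: str) -> None:
        """Copy the source volume's pointer map; no block data is written."""
        if dst in self.volumes:
            raise ValueError("destination volume already exists")
        mapping = dict(self.volumes[src])
        for fp in mapping.values():
            self.refs[fp] += 1
        self.volumes[dst] = mapping

    def read(self, volume: str, lba: int) -> bytes:
        return self.blocks[self.volumes[volume][lba]]
```

After `clone("vm1", "vm1-copy")`, `physical_writes` is unchanged, and a later write to either volume only touches that volume's own pointer map.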

Scalability

Today, an XtremIO cluster can scale from one to four 10TB X-Bricks. EMC describes this as scaling from 10 to 40TB of raw SSD capacity, but remember that you can only add X-Brick increments of storage, so think of it as having a choice of either 10, 20, 30, or 40TB of raw capacity.

EMC has announced plans to launch a higher-capacity X-Brick in Q1 of 2014. These X-Bricks will use larger SSDs and will have 20TB of raw capacity each. Again, EMC will describe this as scaling from 20 to 80TB of raw capacity, but think of it as having a choice of either 20, 40, 60, or 80TB of raw capacity.

The current X-Bricks and the future higher-capacity ones cannot be mixed. An XtremIO cluster is limited to X-Bricks of identical capacity.
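The step-wise capacity choices and the no-mixing rule can be expressed as a short sketch. The function names are hypothetical, and the supported brick counts are passed in as a parameter rather than hard-coded, since the default below simply mirrors the one-to-four range described in this post.

```python
def capacity_options_tb(brick_tb: int, brick_counts=(1, 2, 3, 4)) -> list:
    """Raw capacities available when scaling in whole X-Brick increments."""
    return [brick_tb * n for n in brick_counts]


def cluster_raw_capacity_tb(brick_capacities_tb: list) -> int:
    """Total raw capacity; rejects clusters that mix X-Brick sizes."""
    if not brick_capacities_tb:
        raise ValueError("a cluster needs at least one X-Brick")
    if len(set(brick_capacities_tb)) != 1:
        raise ValueError("all X-Bricks in a cluster must have identical capacity")
    return sum(brick_capacities_tb)
```

With the defaults, `capacity_options_tb(10)` gives `[10, 20, 30, 40]` and `capacity_options_tb(20)` gives `[20, 40, 60, 80]`, while `cluster_raw_capacity_tb([10, 20])` raises a `ValueError`.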

While in the initial launch, an XtremIO cluster is limited to a maximum of four X-Bricks, there is no theoretical or architectural limit to the size of a cluster. (Although you would, in practice, be limited by the number of ports available on your Infiniband switches.) EMC has stated that the next generation of XtremIO will scale to 8 X-Bricks.

EMC is likely to make higher scaling options available in the future if there is sufficient market demand for larger clusters.

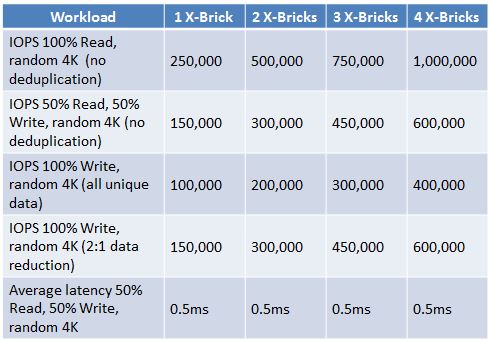

Performance

The XtremIO performance numbers are nothing short of impressive. More so when you understand how performance was measured:

- All performance (including latency) was measured end-to-end from the host

- Workloads used were 100% random

- Different mixes (100% reads, 100% writes, 50/50) were all tested

- Tests were performed with the cluster filled to 80% capacity

- Tests were run over extended time periods

How did XtremIO perform? Check the numbers in the table below:

Availability

The 10TB X-Brick version of XtremIO is generally available now. The 20TB X-Brick version will be available in Q1 of 2014.

VCE recently announced plans to release a Vblock based upon XtremIO before the end of the year. I anticipate their announcing the general availability of that model Vblock shortly.

EMC can only actually do configurations of 1, 2, or 4 X-Bricks. A firmware limitation prevents a 3-brick configuration. Apparently this is going to be addressed some time in 2015, but as it stands now (Q4 2014) it is a limitation. Also, at this time, adding an additional X-Brick (or 2) to an XtremIO array means a full destructive upgrade. You got it kids, back everything up and restore.

You’re partly correct. At release, the XtremIO could only do 1, 2, or 4 X-Brick configs (the 3 X-Brick config was a mistaken mention on my part). With updates announced in July, the XtremIO can be installed as 1, 2, 4, or 6 X-Bricks.

Currently, the upgrades are destructive, as you mentioned. I’ve talked to some XtremIO customers and, to my surprise, they seem far less concerned about that than I’d have expected. Stephen Foskett did a good job summarizing reaction to the destructive upgrade announcement in his post titled “XtremIO Upgrade is Non-Disruptive to Customers”.
