Today, Avere Systems announced its first complete storage solution. Avere is known for their Edge Filers that virtualize NAS systems and/or act as a file-access gateway to object storage.

The just-announced C2N solution combines Avere FXT Edge Filers with Avere’s own new scale-out object storage platform. This combination allows the C2N to be used as a standalone storage solution, while still allowing the FXT to virtualize other NAS devices and act as a gateway to other object storage platforms, either on- or off-premises. I’ll walk through the solution below.

What Was Announced

The name “C2N” comes from what the solution does: “Cloud, Core, NAS”. It consists of three FXT nodes combined with a minimum of three new CX200 storage nodes. (I asked: any similarity between this model number and that of an older Data General/EMC storage platform is purely coincidental.)

The CX200 nodes are 1U storage servers, each with 12 hot-swappable 10TB HDDs, giving 120TB of raw storage capacity per node. The amount of usable capacity depends on the data protection scheme chosen. I cover the C2N’s data protection options in more detail in the next section.

The CX200 nodes run the SwiftStack software to coordinate them into a pool of object storage. The FXT Edge Filers act as a front-end to the pool of object storage, presenting it as either NFSv3, SMB1, or SMB2 (or as all three simultaneously to different client-hosts).

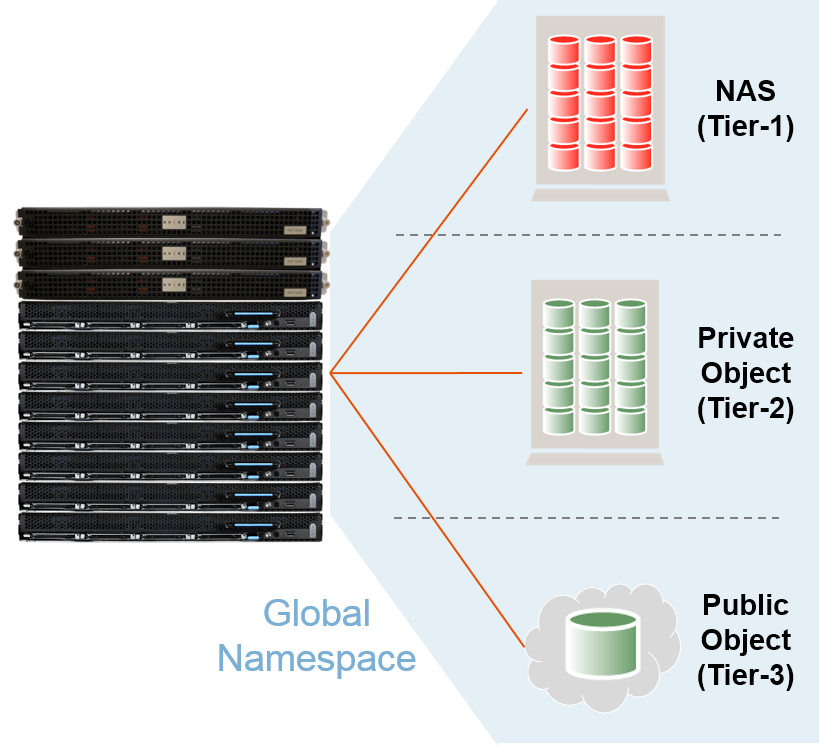

The FXT Edge Filers can also act as a gateway to public cloud storage (Amazon and Google are supported today, with MS Azure on the roadmap). While working with the CX200 nodes, they can also virtualize other vendors’ NAS platforms and work with other on-premises object storage, all within a single, Avere-provided, global namespace. A logical representation of this functionality can be seen in the diagram above.
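To make the global-namespace idea a bit more concrete, here is a minimal sketch of one common way a single namespace can route client paths to different back ends: longest-prefix matching on a junction table. The paths, back-end labels, and the `resolve` function are hypothetical illustrations of the general technique, not Avere’s actual implementation or configuration.

```python
# Hypothetical junction table: namespace prefix -> back end holding the data.
# These paths and labels are illustrative only, not Avere configuration.
JUNCTIONS = {
    "/": "CX200 object pool",
    "/projects": "CX200 object pool",
    "/legacy": "third-party NAS (virtualized)",
    "/archive": "public cloud bucket",
}


def resolve(path: str) -> str:
    """Return the back end for a client path using longest-prefix matching."""
    best = "/"
    for prefix in JUNCTIONS:
        # A prefix matches if the path equals it or continues with a "/".
        if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
            if len(prefix) > len(best):
                best = prefix
    return JUNCTIONS[best]


print(resolve("/legacy/home/report.docx"))
print(resolve("/archive/2017/q2.tar"))
```

Clients see one directory tree; which system actually stores a file is decided by the longest matching prefix, so `/legacyx/...` still falls through to the default pool rather than being misrouted to the NAS.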

Lastly, although it isn’t mentioned in the press release, the C2N solution also includes a 1U MX100 management node. One MX100 is required, and customers have the option of adding a second for high availability.

Data Protection

The CX200 nodes in the C2N solution offer two options for data protection.

The first option is Triple Replication. This is the basic option, and it is just what it sounds like: there are three copies of all data fragments, each stored on a different node (thus the three-node minimum). In this scheme, the usable capacity of each CX200 node is 40TB, and up to two nodes can fail with data still accessible.
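The capacity arithmetic and the failure rule for Triple Replication fit in a few lines. This is a sketch under simplifying assumptions: the round-robin placement and the function names are hypothetical and are not how SwiftStack actually places data; the point is only that three copies on three distinct nodes survive any two node failures and leave 120TB / 3 = 40TB usable per node.

```python
RAW_TB_PER_NODE = 12 * 10  # 12 x 10TB HDDs per CX200 node = 120TB raw
COPIES = 3                 # Triple Replication keeps three copies of every fragment


def place_replicas(fragment_id: int, nodes: list[str]) -> list[str]:
    """Pick three distinct nodes for a fragment (hypothetical round-robin placement)."""
    if len(nodes) < COPIES:
        raise ValueError("Triple Replication needs at least three nodes")
    start = fragment_id % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(COPIES)]


def readable(replicas: list[str], failed: set[str]) -> bool:
    """A fragment stays readable while at least one copy is on a healthy node."""
    return any(node not in failed for node in replicas)


usable_tb = RAW_TB_PER_NODE / COPIES  # 40TB usable per node

nodes = ["node1", "node2", "node3"]
replicas = place_replicas(0, nodes)
print(readable(replicas, failed={"node1", "node2"}))  # two failures: still readable
```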

The second option is Erasure Coding. This requires a minimum of six CX200 nodes. In this case, each object is rewritten as a group of “data fragments” and “parity fragments”, each of which is stored on a different node. In a twelve-node configuration, you could set the scheme for 8+4 protection, giving you 8 data fragments and 4 parity fragments, with one fragment stored on each of the 12 nodes. With this scheme, up to four of the nodes could fail and the C2N could still serve the object to clients.
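Whether an erasure-coded object is still readable comes down to counting surviving fragments. This sketch assumes a maximum-distance-separable code such as Reed-Solomon, where any k of the k+m fragments are enough to rebuild the object, and one fragment per node as in the 12-node 8+4 example. It checks availability only and does not perform the actual encoding; the function name is hypothetical.

```python
def object_readable(k: int, m: int, fragment_nodes: list[str], failed: set[str]) -> bool:
    """Return True if an object split into k data + m parity fragments can still be served.

    Assumes one fragment per node and an MDS code, so any k surviving
    fragments are sufficient to reconstruct the object.
    """
    if len(fragment_nodes) != k + m:
        raise ValueError("expected exactly one node per fragment")
    surviving = sum(1 for node in fragment_nodes if node not in failed)
    return surviving >= k


# 12-node cluster, 8+4 scheme: one fragment on each node.
nodes = [f"node{i:02d}" for i in range(1, 13)]
print(object_readable(8, 4, nodes, failed=set(nodes[:4])))  # True: 8 fragments left
print(object_readable(8, 4, nodes, failed=set(nodes[:5])))  # False: only 7 left
```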

The Erasure Coding scheme is, obviously, more space-efficient than Triple Replication. Using an 8+5 Erasure Coding scheme, the usable capacity of each CX200 node is 75TB.
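The space efficiency of each scheme is simply the share of stored fragments that carry data, applied to the 120TB of raw capacity per node. A minimal sketch of that ratio follows. Note that for 8+5 the simple ratio gives roughly 73.8TB per node rather than the 75TB quoted above; the gap is presumably rounding or how Avere counts capacity, which is an assumption and not something Avere stated.

```python
RAW_TB_PER_NODE = 120  # 12 x 10TB drives per CX200 node


def usable_per_node(data_fragments: int, total_fragments: int) -> float:
    """Usable TB per node = raw capacity x (data share of everything stored)."""
    return RAW_TB_PER_NODE * data_fragments / total_fragments


replication = usable_per_node(1, 3)  # 1 useful copy out of 3 stored
ec_8_4 = usable_per_node(8, 12)      # 8 data fragments out of 12
ec_8_5 = usable_per_node(8, 13)      # 8 data fragments out of 13

for name, tb in [("Triple Replication", replication), ("8+4 EC", ec_8_4), ("8+5 EC", ec_8_5)]:
    print(f"{name}: {tb:.1f}TB usable per node")
```

The trade-off is visible in the ratios: adding a fifth parity fragment buys one more tolerated failure at the cost of a few TB per node.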

The C2N can expand to a maximum of 72 CX200 nodes. Actually, it could likely expand to a greater number of nodes, but 72 nodes was the maximum number that Avere tested with the current software release. Why 72 nodes? Because (72 CX200 nodes) + (3 FXT Edge Filers) + (2 MX100 Management nodes) + (the top-of-rack network switches) is just enough equipment to fill two standard 40U data center racks. This maximum configuration, using the 8+5 Erasure Coding scheme, would give 5.4PB of usable capacity.
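The 5.4PB figure follows directly from the per-node numbers quoted earlier (75TB usable per node under 8+5 Erasure Coding, 120TB raw), using decimal units where 1PB = 1,000TB:

```latex
\begin{aligned}
\text{usable} &= 72 \times 75\,\text{TB} = 5{,}400\,\text{TB} = 5.4\,\text{PB} \\
\text{raw}    &= 72 \times 120\,\text{TB} = 8{,}640\,\text{TB} = 8.64\,\text{PB}
\end{aligned}
```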

Additional Resiliency Options

Like other scale-out object storage solutions, CX200 nodes in the C2N solution can be geo-dispersed (a fancy technical term meaning “installed in separate physical locations”). Today, Avere supports exactly three separate locations (no more and no less) for geo-dispersion.

Within geo-dispersion, Avere distinguishes between two configurations: Multi-Zone and Multi-Region.

Multi-Zone

Each of the three locations must meet the following requirements for a Multi-Zone high-availability configuration:

- A minimum of 10Gbps interconnect between sites

- A maximum of 5ms latency across those interconnects

This means that the Multi-Zone configuration is most likely going to be used with separate buildings on the same campus, or with separate locations in the same metropolitan region.
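The two Multi-Zone requirements amount to a per-link check across the three sites. This sketch assumes the limits apply to every pair of sites; the `Link` type, the function name, and the sample numbers are hypothetical and not part of any Avere tool.

```python
from dataclasses import dataclass

MIN_BANDWIDTH_GBPS = 10.0  # Multi-Zone: at least 10Gbps between sites
MAX_LATENCY_MS = 5.0       # Multi-Zone: at most 5ms across the interconnect


@dataclass
class Link:
    site_a: str
    site_b: str
    bandwidth_gbps: float
    latency_ms: float


def multi_zone_eligible(links: list[Link]) -> bool:
    """True only if exactly three sites are fully interconnected and every link meets both limits."""
    sites = {s for link in links for s in (link.site_a, link.site_b)}
    pairs = {frozenset((link.site_a, link.site_b)) for link in links if link.site_a != link.site_b}
    if len(sites) != 3 or len(pairs) != 3:
        return False
    return all(
        link.bandwidth_gbps >= MIN_BANDWIDTH_GBPS and link.latency_ms <= MAX_LATENCY_MS
        for link in links
    )


campus = [Link("A", "B", 40, 0.5), Link("B", "C", 40, 0.7), Link("A", "C", 10, 1.2)]
global_sites = [
    Link("Boston", "London", 10, 80),
    Link("London", "Tokyo", 10, 230),
    Link("Boston", "Tokyo", 10, 180),
]
print(multi_zone_eligible(campus))        # campus links meet both limits
print(multi_zone_eligible(global_sites))  # intercontinental latency is far above 5ms
```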

If the Zone requirements are met, the CX200 nodes can be spread across the three locations and use the Erasure Coding data protection scheme. In this set-up, an entire Zone (location) can fail, plus an additional node at one of the other two locations, and the C2N can still serve its data to clients.

Multi-Region

A Multi-Region set-up is what you’d want if you need greater protection against outages. Multi-Region doesn’t have the strict latency requirements of Multi-Zone, so the locations can be much farther apart, e.g., Boston, London, and Tokyo.

The drawback of this is that a Multi-Region configuration only supports the Triple Replication data protection scheme. So, the C2N will have a lower space efficiency, but a higher resiliency.

In a Multi-Region set-up, an entire Region (location) can fail, plus an additional node at one of the other two locations, with no effect on overall uptime. Further, two entire Regions can fail, and data can still be served from the third location.
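Triple Replication across three Regions can be modeled by assuming one copy per Region: data stays readable as long as any copy sits on a healthy node in a healthy Region. The city names follow the example above; the node names, the round-robin node choice, and the function names are hypothetical simplifications, not SwiftStack’s placement algorithm.

```python
from itertools import combinations

REGIONS = ["Boston", "London", "Tokyo"]


def place_one_copy_per_region(
    nodes_by_region: dict[str, list[str]], fragment_id: int
) -> list[tuple[str, str]]:
    """Put each of the three copies in a different Region (node choice is round-robin)."""
    placement = []
    for region in REGIONS:
        nodes = nodes_by_region[region]
        placement.append((region, nodes[fragment_id % len(nodes)]))
    return placement


def survives(placement: list[tuple[str, str]], failed_regions: set[str], failed_nodes: set[str]) -> bool:
    """Data is readable while any copy sits on a healthy node in a healthy Region."""
    return any(r not in failed_regions and n not in failed_nodes for r, n in placement)


nodes_by_region = {"Boston": ["b1", "b2"], "London": ["l1", "l2"], "Tokyo": ["t1", "t2"]}
p = place_one_copy_per_region(nodes_by_region, 0)

# A whole Region plus one node elsewhere can fail.
print(survives(p, {"Boston"}, {"l1"}))
# Any two whole Regions can be lost and the third still serves the data.
print(all(survives(p, set(lost), set()) for lost in combinations(REGIONS, 2)))
```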

Availability

The C2N solution has been in a Directed Availability program and installed at customer sites since May of this year.

According to Avere’s press release, the C2N is available for ordering now in North America, and 90 days from now in the rest of the world, although I was told that units wouldn’t actually ship before the end of September.

The list price for a single CX200 node will start at $95,500 USD.
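Combining the starting list price with the per-node capacity figures above gives a rough cost-per-terabyte comparison. This only divides the quoted per-node price by per-node capacity, so it says nothing about the cost of the FXT Edge Filers, the MX100 node, networking, support, or any discounts; the names are hypothetical.

```python
LIST_PRICE_USD = 95_500  # starting list price per CX200 node
RAW_TB = 120             # 12 x 10TB drives per node
USABLE_TB = {"Triple Replication": 40, "8+5 Erasure Coding": 75}  # per-node figures quoted above


def price_per_tb(tb: float) -> float:
    """List price divided by capacity, in USD per TB."""
    return LIST_PRICE_USD / tb


minimum_cx200_cost = 3 * LIST_PRICE_USD  # three-node minimum, CX200 nodes only

print(f"Raw: ${price_per_tb(RAW_TB):,.2f} per TB")
for scheme, tb in USABLE_TB.items():
    print(f"{scheme}: ${price_per_tb(tb):,.2f} per usable TB")
print(f"Three-node minimum (CX200 only): ${minimum_cx200_cost:,}")
```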

GeekFluent’s Thoughts

- Overall, I think this is a good move for Avere. Up until now, they’ve only offered solutions that were “add-ons” to other vendors’ storage platforms. With the introduction of the C2N, Avere is able to offer customers a complete standalone storage solution.

- For me, to really round this solution out, I’d love to see direct object access via S3 or a similar protocol. Currently, due to their arrangement with SwiftStack, Avere can’t offer this. Customers could purchase SwiftStack software directly and get something resembling that functionality, but implementing that sounded complicated when I asked about it.

- Avere needs to get on the NFSv4 bandwagon sooner rather than later. More and more organizations are moving from NFSv3 to NFSv4, and Avere will likely lose several opportunities until they add version 4 support.

- Object storage always seems to confuse some customers. They get the idea of file storage, but object storage as something different doesn’t always come across. I believe this has to do with the fact that the majority of “objects” that get stored are, in fact, files. (Note to self: write a blog post on this subject, explaining the similarities and differences.)

- In order for on-premises object storage to become widely adopted for any use other than archive, applications will need to become object-aware and object-capable. Today most applications read from and write to either raw disk (block storage) or files (file storage). Very few read from and write to object storage directly, making solutions like Avere’s FXT Edge Filers necessary.