Today, as part of their “MegaLaunch”, EMC announced updates to the Isilon scale-out NAS platform. The updates include two new hardware nodes, expanded Flash capabilities, and new data access methods.

I’ll walk through the updates below.

New Hardware

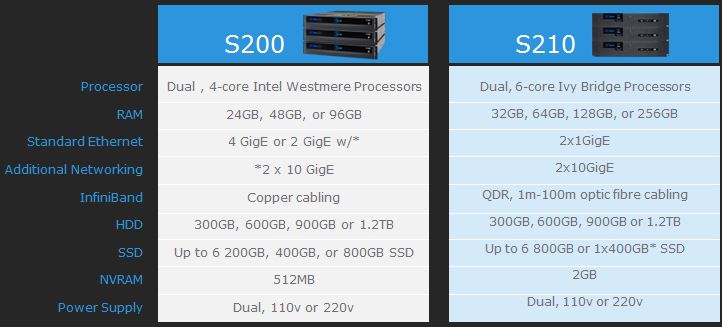

EMC announced the addition of new IOPS-performance S-node and throughput-performance X-node hardware. The new nodes are the S210 and the X410.

Comparisons between the current nodes and the new ones are in the tables below:

New Data Access

In addition to the multiple means of simultaneous data access Isilon provides today, EMC announced the addition of support for SMB 3.0 with full support for multi-channel, improving performance and availability.

Support for HDFS 2.3 and native object storage via OpenStack SWIFT is expected by the end of the year.

SmartFlash

EMC also announced a new feature called SmartFlash.

Currently, while Flash drives in Isilon nodes can be used for data storage, they rarely are. Instead, they’re used to store metadata, providing a huge performance benefit to filesystems. For example, for typical NFS mounts, up to 60% of all storage activity is GETATTR (Get Attribute) calls, which are metadata lookups rather than actual reads or writes of data. Having the metadata on Flash greatly accelerates these calls (especially directory walks on filesystems containing millions of files).
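To get a feel for why accelerating metadata matters so much, here's a small sketch using Amdahl's law. The 60% figure comes from the paragraph above; everything else is my assumption for illustration. In particular, the per-call speedup factors are made-up inputs rather than measurements, and the model simplistically assumes every call costs about the same time before acceleration.

```python
# Amdahl's law sketch: overall speedup when only metadata calls get faster.
# Assumptions (not from EMC): every call takes roughly equal time before
# acceleration, and the metadata speedup factors below are hypothetical.

METADATA_FRACTION = 0.60  # "up to 60%" of NFS activity is GETATTR


def overall_speedup(metadata_fraction: float, metadata_speedup: float) -> float:
    """Overall speedup when only the metadata fraction is accelerated."""
    remaining = 1.0 - metadata_fraction
    return 1.0 / (remaining + metadata_fraction / metadata_speedup)


for factor in (2, 10, 100):  # hypothetical per-call speedups from Flash
    print(f"metadata {factor}x faster -> overall "
          f"{overall_speedup(METADATA_FRACTION, factor):.2f}x faster")
```

Under this model, the overall gain tops out at 2.5x no matter how fast the metadata lookups get, because the other 40% of the work is untouched.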

SmartFlash allows the use of Flash drives as additional globally-coherent cache in a cluster. Use of SmartFlash can add up to 1PB of cache to a single Isilon cluster. Compare this to the maximum of 37TB of DRAM cache available to a cluster, roughly 27 times less, and you can see the potential performance benefits. When you consider that SSDs cost one-tenth the price of similarly-sized DRAM, you can see huge cost-effectiveness as well.
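To put those numbers side by side, here's a quick back-of-the-envelope sketch in Python. It uses only the figures quoted above (1PB, 37TB, one-tenth the price) and assumes decimal units (1PB = 1000TB). The function and variable names are mine, for illustration only.

```python
# Back-of-the-envelope comparison using the figures quoted in this post.
# Assumption: decimal units, so 1 PB = 1000 TB.

SMARTFLASH_CACHE_TB = 1000   # up to 1 PB of SmartFlash cache per cluster
DRAM_CACHE_TB = 37           # maximum DRAM cache per cluster
SSD_TO_DRAM_PRICE = 0.1      # SSD costs about one-tenth of same-sized DRAM


def capacity_ratio(flash_tb: float, dram_tb: float) -> float:
    """How many times larger the flash cache is than the DRAM cache."""
    return flash_tb / dram_tb


def relative_cost(flash_tb: float, dram_tb: float, price_ratio: float) -> float:
    """Cost of the flash cache relative to the cost of the DRAM cache."""
    return (flash_tb * price_ratio) / dram_tb


ratio = capacity_ratio(SMARTFLASH_CACHE_TB, DRAM_CACHE_TB)
cost = relative_cost(SMARTFLASH_CACHE_TB, DRAM_CACHE_TB, SSD_TO_DRAM_PRICE)
print(f"SmartFlash cache is about {ratio:.0f}x the DRAM cache")
print(f"and costs about {cost:.1f}x as much as that DRAM")
```

In other words, going by the quoted figures, you'd get roughly 27 times the cache for under 3 times the cost of the DRAM cache.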

Future Implications

Most folks, when looking at the new hardware specs, probably focused on the increased CPU power, increased RAM, or larger NVRAM. Don’t get me wrong, those are awesome, but let’s face it: that’s table stakes for any new hardware.

Those performance-increasing specs make it easy to overlook what I think is one of the most significant changes in the new hardware — the new InfiniBand interfaces.

Current Isilon nodes have Double-Data Rate (DDR) InfiniBand interfaces that use copper cables limited to 10m in length. I know of many Isilon installations where folks have gone through some serious extra work to ensure that all nodes in a large cluster are within reach of the InfiniBand switches.

The new Isilon nodes have Quad-Data Rate (QDR) InfiniBand interfaces that use fibre cables that can be up to 100m long. Now, customers will be able to have nodes in the same cluster dispersed throughout the data center. No more having to shuffle hardware in racks in order to add new nodes to a cluster.

To me, though, the InfiniBand implications go further than that, in two significant ways.

First, while current Isilon nodes use DDR (20Gb/s) interfaces, they generally connect to QDR (40Gb/s) switches. Being able to take advantage of the full 40Gb/s bandwidth on the back-end should provide even more performance from the new nodes.
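As a rough sketch of what that doubling means: the 20Gb/s and 40Gb/s figures are signaling rates for a 4x link. DDR and QDR InfiniBand both use 8b/10b encoding, so the usable data rate is 80% of the signaling rate. The Python below works through the arithmetic. These are theoretical per-link ceilings, not measured Isilon numbers, and the helper name is just for illustration.

```python
# Theoretical InfiniBand 4x link rates. DDR and QDR use 8b/10b encoding,
# so only 8 of every 10 bits on the wire carry data.

ENCODING_EFFICIENCY = 8 / 10

SIGNALING_GBPS = {"DDR": 20, "QDR": 40}  # figures quoted in this post


def data_rate_gbps(signaling_gbps: float) -> float:
    """Usable data rate in Gb/s after 8b/10b encoding overhead."""
    return signaling_gbps * ENCODING_EFFICIENCY


for name, rate in SIGNALING_GBPS.items():
    usable = data_rate_gbps(rate)
    print(f"{name}: {rate} Gb/s signaling, {usable:.0f} Gb/s data, "
          f"{usable / 8:.0f} GB/s")
```

So the per-link ceiling goes from about 2GB/s to about 4GB/s. How much of that shows up in practice depends on the workload.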

Second, today there are three things that limit the maximum number of nodes that can be in an Isilon cluster.

The first, of course, is OneFS code support for nodes. Since that’s software, that’s easily changed.

The second is the number of ports available on the back-end InfiniBand switches. New, larger QDR switch models are becoming available.

The third is the physical limitations imposed by the 10m back-end copper cabling limit. Increase the 10m to 100m with the fibre cables, and this limit disappears.

Add this all up, and it’s clear that the groundwork is being laid for larger, higher-performance Isilon clusters in the future.
