[UPDATE: 4 September 2012: This post refers to VFCache at the time of launch and is outdated now that EMC has released VFCache 1.5. You can read my post about that release here.]

Today, EMC announced their much-anticipated server-side Flash product, Project Lightning, under its official name, VFCache.

Lots of folks are writing about VFCache and all the amazing performance-enhancing results it produces. Some are doing deep dives into how it works. Since there are plenty of places to get all that information (there are five VFCache white papers on the EMC company site), I don’t see any reason to cover that same territory in detail here. Instead, after a brief overview of VFCache, I’ll be discussing how it works in a VMware environment.

Full Disclosure: In my position as an EMC employee I’ve had access to VFCache information in advance of today’s launch. I also have access to roadmap information about planned future enhancements and expansions. Under my agreements with EMC, I am not in a position to discuss VFCache futures. I will restrict my commentary to VFCache capabilities “at launch”.

VFCache: An Introduction

VFCache is a PCIe Flash card that installs inside servers. It comes with software drivers to provide intelligent management of its cache.

You may already be familiar with EMC’s Fully Automated Storage Tiering (FAST). FAST works within the storage array and intelligently moves in-demand (hot) data to higher-performance storage tiers and less-requested (cold) data to higher-capacity tiers. It does this movement non-disruptively and in response to actual real-time demand, all managed according to user-configurable policies.

The easiest way to grasp what VFCache does is to think of it as extending the FAST concept from the storage array to the server. Intelligent drivers determine what data to put in the server-side Flash for fast response (before it is copied to the array) and what data to send directly to the array. As with FAST, this happens in response to actual real-time data demand and is managed by user-configurable policies.
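To make the “intelligent driver” idea a little more concrete, here is a toy Python sketch of demand-driven caching. It is not EMC’s algorithm (the VFCache white papers describe the real behavior). The class name `HotDataCache`, the `promote_after` threshold standing in for a user-configurable policy, the LRU eviction, and the write-through handling of writes are all assumptions made purely for illustration.

```python
from collections import Counter, OrderedDict
from typing import Any, Callable, Tuple


class HotDataCache:
    """Toy server-side read cache (hypothetical, for illustration only).

    A block is admitted to flash only after it has been read `promote_after`
    times (standing in for a user-configurable policy); when the cache is
    full, the least recently used block is evicted.
    """

    def __init__(self, capacity_blocks: int, promote_after: int = 2):
        self.capacity = capacity_blocks
        self.promote_after = promote_after
        self.misses: Counter = Counter()   # read counts for blocks not yet cached
        self.cache = OrderedDict()         # block -> data, oldest first (LRU order)

    def read(self, block: int, read_from_array: Callable[[int], Any]) -> Tuple[Any, str]:
        if block in self.cache:
            self.cache.move_to_end(block)          # mark as most recently used
            return self.cache[block], "flash"
        data = read_from_array(block)              # miss: fetch from the storage array
        self.misses[block] += 1
        if self.misses[block] >= self.promote_after:
            del self.misses[block]
            self.cache[block] = data               # block is now "hot": admit it
            if len(self.cache) > self.capacity:
                self.cache.popitem(last=False)     # evict the least recently used block
        return data, "array"

    def write(self, block: int, data: Any, write_to_array: Callable[[int, Any], None]) -> None:
        write_to_array(block, data)                # write-through: the array always gets the write
        if block in self.cache:
            self.cache[block] = data               # keep the cached copy consistent
            self.cache.move_to_end(block)
```

The threshold is what keeps one-off reads from pushing genuinely hot data out of the (small, expensive) Flash, which is the same intuition behind FAST’s promotion of in-demand data.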

You might be wondering what kind of performance benefit you can get, especially since at first glance the VFCache drivers might appear to add overhead by inspecting the data before writing it. It turns out that the answer is “quite stunning performance benefits”. To give you an idea, take a look at the diagram below:

What you see in the diagram is the “access density” in IOPS/GB of the following three types of storage media (from left to right):

- Traditional Hard Disk Drive

- Solid State Flash Drive

- PCIe Flash (like VFCache)

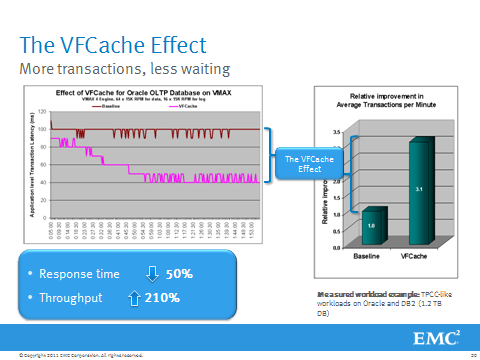

And no, you’re not misreading that. VFCache can offer up to 4,000 times the access density of HDDs. Better data access in this case translates directly to better performance, as measured by increased throughput (IOPS) and decreased latency (response time). An example of this increased performance for an Oracle OLTP database workload can be seen in the diagram below:

In the graph on the left, the top line (brown) shows transaction latency without VFCache. The bottom line (purple) shows transaction latency for the identical workload with VFCache installed. Clearly, VFCache is designed with high-performance, high-throughput applications in mind. VFCache increases the performance of both reads and writes.
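Access density is simply IOPS divided by capacity, and the link between lower latency and higher throughput follows from Little’s Law (throughput = outstanding I/Os ÷ response time). The short Python sketch below illustrates both. The function names are my own, and the device figures are round, hypothetical numbers chosen for easy arithmetic, not measurements of VFCache or any other EMC product.

```python
def access_density(iops: float, capacity_gb: float) -> float:
    """IOPS per GB of capacity: how much I/O each stored gigabyte can sustain."""
    return iops / capacity_gb


def throughput_iops(outstanding_ios: float, latency_ms: float) -> float:
    """Little's Law rearranged: throughput = concurrency / response time."""
    return outstanding_ios / (latency_ms / 1000.0)


if __name__ == "__main__":
    # Hypothetical round numbers, for illustration only.
    hdd = access_density(iops=200, capacity_gb=1000)       # 0.2 IOPS/GB
    flash = access_density(iops=100_000, capacity_gb=500)  # 200 IOPS/GB
    print(f"PCIe Flash vs HDD access density: {flash / hdd:.0f}x")
    # Same 32 outstanding I/Os, two different response times.
    print(f"{throughput_iops(32, 5.0):.0f} IOPS at 5 ms")
    print(f"{throughput_iops(32, 0.1):.0f} IOPS at 0.1 ms")
```

Holding the number of outstanding I/Os constant, cutting response time is what drives IOPS up, which is why the latency and throughput improvements go hand in hand.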

VFCache with VMware vSphere

VFCache can offer similar performance benefits to virtualized applications running on vSphere, but there are a few particulars about using VFCache in a VMware environment that users need to be aware of.

The first is that there are no VFCache drivers for vSphere itself. This means that the VFCache software drivers won’t be installed on ESXi directly, but will instead be installed within the Guest OS.

This means that VFCache won’t improve vSphere’s performance directly, but it will improve the performance of the applications running in the Guest OS of each VM on vSphere, which, quite frankly, is what you (and your users) actually care about.

This also means that when using VFCache in a vSphere environment, you have a lot of flexibility in choosing which applications will use VFCache and which won’t. Because the drivers are installed in the Guest, you install them only on the VMs with the greatest performance requirements. That way, you get the benefit where you need it most and don’t have to worry about lower-priority workloads using space on the PCIe Flash card.
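If you want to be deliberate about which VMs get the Guest drivers, the decision amounts to prioritizing under a capacity limit. The Python sketch below assumes you assign each VM a priority score and an amount of card capacity you’d like it to use; the `CandidateVM` class, the scoring, the greedy selection, and the VM names are all hypothetical and are not part of VFCache or vSphere.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateVM:
    name: str
    priority: int   # hypothetical score: higher = more performance-critical
    cache_gb: int   # hypothetical share of the PCIe Flash card for this VM


def pick_vfcache_vms(vms: List[CandidateVM], card_gb: int, min_priority: int) -> List[str]:
    """Greedy choice: highest-priority VMs first, as long as their cache fits on the card."""
    chosen: List[str] = []
    used = 0
    for vm in sorted(vms, key=lambda v: v.priority, reverse=True):
        if vm.priority < min_priority:
            break  # everything left is lower priority: leave it on the array alone
        if used + vm.cache_gb <= card_gb:
            chosen.append(vm.name)
            used += vm.cache_gb
    return chosen
```

For example, with a hypothetical 300 GB card and `min_priority=5`, a 150 GB database VM and a 100 GB ERP VM would be chosen, a third high-priority VM that no longer fits would be skipped, and low-priority web servers would never be considered.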

The second thing to be aware of is that while the VFCache drivers don’t get installed on ESXi, there is a vCenter plug-in for VFCache that will need to be installed. The plug-in handles cache control and monitoring for the VFCache cards installed on your ESXi servers.

The last thing to be aware of in a VMware environment is that, today, VFCache does not offer support for clustering.

What does this mean? A VM using VFCache cannot be moved via vMotion while VFCache is active. Since you’d typically use VFCache only for your highest-performing VMs, this should, for the most part, cause minimal inconvenience: you’d likely run those VMs on a separate compute tier or use policies to guarantee their access to resources.
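One way to picture this restriction: any automated balancer has to treat VFCache-active VMs as pinned to their host. The toy Python sketch below shows that filter inside a simplified, DRS-like choice (move the lightest movable VM off the busiest host). It is not how DRS actually computes its recommendations; the `GuestVM` fields, the CPU-load metric, and the function name are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class GuestVM:
    name: str
    host: str
    cpu_load: float        # hypothetical load metric, 0.0 to 1.0
    vfcache_active: bool   # True while the VFCache driver is active in the Guest


def pick_vm_to_rebalance(vms: List[GuestVM]) -> Optional[str]:
    """Toy balancer: from the busiest host, choose the lightest VM that may be
    vMotioned. VMs with VFCache active are treated as pinned to their host."""
    load: Dict[str, float] = {}
    for vm in vms:
        load[vm.host] = load.get(vm.host, 0.0) + vm.cpu_load
    if not load:
        return None
    busiest = max(load, key=load.get)
    movable = [vm for vm in vms if vm.host == busiest and not vm.vfcache_active]
    if not movable:
        return None  # everything on the busiest host is pinned
    return min(movable, key=lambda vm: vm.cpu_load).name
```

Note that the heaviest VM on the busiest host may well be the VFCache-accelerated one, so the balancer has to settle for moving something else, or nothing at all.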

Now, you might be thinking, “Sure, I can live with my highest-performing VMs not being managed by DRS (the Distributed Resource Scheduler, which load-balances by automatically moving VMs across physical servers via vMotion), but surely you’re not asking me to choose between VFCache and vMotion? I’m going to need vMotion sometimes, like when I want to put a server into maintenance mode…”

Never fear. EMC provides recipes for scripts that let you move VFCache-using VMs when you need to, by automating the following steps:

- Suspend the VFCache drivers, so the VM interacts solely with the storage array for its data

- Use Storage vMotion to non-disruptively move the data on the VFCache card into the storage array.

- vMotion the VM non-disruptively to another server

- Use Storage vMotion to non-disruptively move data from the storage array to the VFCache card on the new server

- Re-enable the VFCache drivers
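The recipe above can be sketched as a simple ordered workflow. The Python below is not EMC’s script: `suspend_cache`, `storage_vmotion`, `vmotion`, and `resume_cache` are hypothetical hooks you would wire to your own tooling, and the host and datastore names are placeholders. The design choice worth noting is that the workflow stops at the first failed step and reports how far it got. Because the first step suspends the drivers so the VM works solely from the storage array, this sketch assumes that stopping early leaves the VM running without cache acceleration rather than down.

```python
from typing import Callable, List, Tuple


def migrate_vfcache_vm(
    vm: str,
    dst_host: str,
    array_datastore: str,
    dst_flash_datastore: str,
    suspend_cache: Callable[[str], None],
    storage_vmotion: Callable[[str, str], None],
    vmotion: Callable[[str, str], None],
    resume_cache: Callable[[str], None],
) -> List[str]:
    """Run the five-step recipe in order; return the labels of completed steps.

    All four callables are hypothetical hooks supplied by the caller.
    """
    steps: List[Tuple[str, Callable[[], None]]] = [
        ("suspend VFCache driver", lambda: suspend_cache(vm)),
        ("Storage vMotion card -> array", lambda: storage_vmotion(vm, array_datastore)),
        ("vMotion to " + dst_host, lambda: vmotion(vm, dst_host)),
        ("Storage vMotion array -> new card", lambda: storage_vmotion(vm, dst_flash_datastore)),
        ("re-enable VFCache driver", lambda: resume_cache(vm)),
    ]
    done: List[str] = []
    for label, action in steps:
        try:
            action()
        except Exception as exc:
            # Stop at the first failure and report how far the workflow got.
            raise RuntimeError(f"{vm}: '{label}' failed; completed: {done}") from exc
        done.append(label)
    return done


if __name__ == "__main__":
    def log(*args: str) -> None:
        print("stub:", *args)  # placeholder hook that only prints its arguments

    for step in migrate_vfcache_vm("oltp-db", "esx02", "array-ds", "esx02-flash",
                                   log, log, log, log):
        print("done:", step)
```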

All this can happen with minimal effect on the VM in question. It experiences no downtime; there is simply a brief period during which it isn’t getting the additional performance benefits of VFCache.

Conclusion

VFCache offers huge performance increases for applications needing high throughput, whether they’re running on traditional physical servers or have been virtualized. The Era of Flash, and the advantages it brings, is here to stay.

Thanks! A straightforward, low-calorie explanation.

Thanks! Glad you found it helpful.

Great article, Dave. Very easy to understand, and it helps customers see the value of VFCache.

Thanks!

Hi Dave

Do you know what would happen in a VMware HA situation? Would the VM admin have to reconfigure VFCache for those VMs?

Danny,

With VMware HA, in response to a server failure, I’m pretty sure that VFCache behaves in one of two ways: one of them good, and the other even better.

I’ve got questions in to the VFCache engineers to get the definitive answer. As soon as I get it, I’ll post here — just wanted to post something now to let you know that I’ve received your question and am working to get you the solid answer.

Stay tuned…

Danny,

Sorry for the delay (a combination of my and others’ travel schedules), but I now have the definitive answer from the engineers.

I need to be very clear in my answer; please interpret it as precision, not pedantry.

The current “version 1.0” of VFCache is not compatible with VMware HA. The reason for this is that VFCache presents the cache to the VM as if it were a DAS device and DAS is obviously incompatible with HA (which requires shared storage).

There are all kinds of feature improvements and additions on the way, as well as a lot more/better integration, but I’m not in a position where I can discuss that in a public forum.

Hope this helps!

-Dave

Hi. Just want to let you know that your post made it to the VMware Global Alliances Blog weekly roundup post: http://bit.ly/yiqOi3

Gina,

Thanks! This is my first time making such a prestigious roundup, but hopefully not my last. :-)

-Dave
